Introduction

Free and Open-Source Software and the Linux ecosystem hold real promise in terms of openness and innovation, but they also pose concrete challenges to digital sovereignty when they rely heavily on dependency chains and repositories maintained by third parties. These dependencies expose organizations to supply chain risks, loss of legal control, and obsolescence.

Conversely, proprietary on-premise solutions, particularly Microsoft Windows Server environments and Windows workstations, often offer more immediate operational, hardware, and legal control for businesses and individuals.

Strategic innovation is not just about software and open source; the Windows gaming market has funded hardware breakthroughs — notably the rise of GPUs — which are now benefiting research, weather forecasting and AI.

A pragmatic approach must distinguish between local (on-premise) and remote (cloud) solutions and assess effective governance, not just the “open source” or “proprietary” label.

1. Dependency hell in the FOSS ecosystem

- Modern FOSS projects rely heavily on hundreds or even thousands of packages distributed via package managers and online repositories (npm, PyPI, crates.io, etc.).

- They are often maintained by volunteer contributors and hosted on servers whose location and legal status are unknown.

- Many are hosted by individual accounts, without any legal guarantees or verified identity, sometimes without multi-factor authentication.

“Many of the Top 500 packages on our lists are hosted under individual developer accounts. The consequences of such heavy reliance upon individual developer accounts must not be discounted. For legal, bureaucratic, and security reasons, individual developer accounts have fewer protections associated with them than organizational accounts in a majority of cases. While these individual accounts can employ measures like multi-factor authentication (MFA), they may not always do so, leaving individual computing environments more vulnerable to attack. These accounts also do not have the same granularity of permissioning and other publishing controls that organizational accounts do. This means that changes to code under the control of these individual developer accounts are significantly easier to make.”

The Linux Foundation – Census III of Free and Open Source Software – December 2024

- Recent incidents on the npm ecosystem demonstrate the scale of the problem: massively downloaded packages have been compromised and used to spread malware, illustrating the reach of an attack on public repositories and the ability to affect millions of applications upstream and downstream.

- This operational and legal fragility makes digital sovereignty a delicate matter: the disappearance of a repository, the abandonment of a maintainer, or the malicious modification of a package can force unforeseen migrations or break production chains.

- The availability and location of the servers hosting the repositories, as well as the governance model, are therefore highly variable, which complicates any guarantee of continuity or application of sovereignty rules (jurisdiction, storage of metadata, access to logs).

- Recommendations stemming from sector analyses highlight the need for organization-wide governance and inventory of open-source software to manage these risks. Recent studies and summaries show that the FOSS ecosystem is ubiquitous in production environments, but that its maintenance model raises security, maintenance, and scalability issues.
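To make the scale of these dependency chains concrete, here is a minimal sketch of how a handful of direct dependencies expands into a larger transitive set. The graph and every package name in it are hypothetical and do not correspond to real registry entries; a real inventory would be built from the lock files or metadata of the package manager in use.

```python
from collections import deque

# Hypothetical dependency graph: package name -> direct dependencies.
# The names are illustrative only, not real registry entries.
DEPENDENCY_GRAPH = {
    "my-app": ["web-framework", "http-client"],
    "web-framework": ["template-engine", "router", "logger"],
    "http-client": ["tls-wrapper", "logger"],
    "template-engine": ["string-utils"],
    "router": ["string-utils"],
    "tls-wrapper": [],
    "logger": ["color-output"],
    "string-utils": [],
    "color-output": [],
}


def transitive_dependencies(graph, root):
    """Return every package reachable from root, excluding root itself."""
    seen = set()
    queue = deque(graph.get(root, []))
    while queue:
        package = queue.popleft()
        if package in seen:
            continue  # already visited through another path
        seen.add(package)
        queue.extend(graph.get(package, []))
    seen.discard(root)  # a cyclic graph could otherwise report the root
    return seen


direct = DEPENDENCY_GRAPH["my-app"]
closure = transitive_dependencies(DEPENDENCY_GRAPH, "my-app")
print(f"direct: {len(direct)}, transitive: {len(closure)}")
```

In this toy graph, two direct dependencies pull in eight packages in total, each of which is a separate maintainer account and hosting location to account for.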

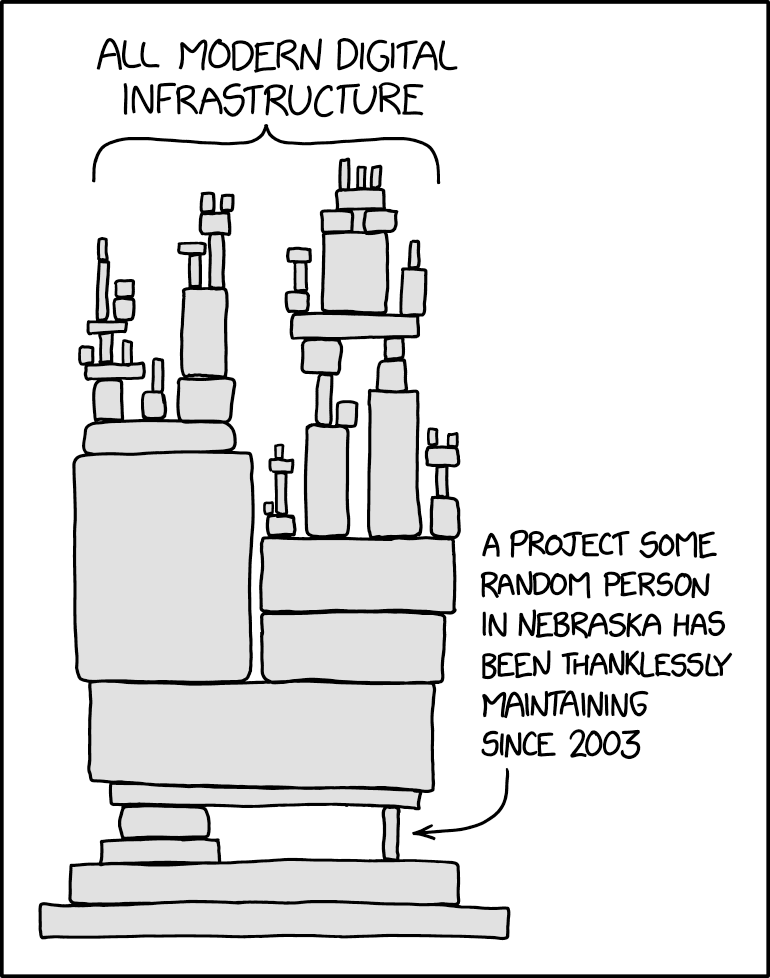

The most emblematic example of the difficulties and risks associated with current FOSS practices is the story of the XZ Utils compromise discovered in March 2024.

A project that began as a personal hobby had over the years become an essential component of Linux distributions deployed at the heart of data centers worldwide. It was maintained under an individual account by a single, isolated person who, suffering from burnout, eventually handed over maintenance to another contributor whose true identity remains unknown to this day. That contributor injected a backdoor into the project’s releases, which reached the development and testing branches of several major distributions through the supply chain before it was caught. Despite the open-source nature of the code and the supposed abundance of community code reviews, a critical component, widely used by many other developers and deployed at scale, was entrusted in a completely informal manner to an unknown and, moreover, malicious third party. It’s a situation straight out of the xkcd comic.

Sources :

- https://www.linuxfoundation.org/hubfs/LF%20Research/lfr_censusiii_120424a.pdf?hsLang=en

- https://www.trendmicro.com/en_us/research/25/i/npm-supply-chain-attack.html

- XZ Utils: How a backdoor in a Linux component caused fears of the worst

2. Technical difficulties of development under Linux

- Fragmentation of distributions and versions : providing stable binaries for Debian, Ubuntu, Fedora, Red Hat, Arch, and their numerous versions requires specific packaging, testing, and maintainers for each target, complicating the delivery process. ABI breaks due to glibc updates are frequent, which complicates the task of developers and harms the long-term sustainability of packages.

- Fragmentation of graphical environments and toolkits : the splits between Qt and GTK, X11 and Wayland, and GNOME and KDE make the user experience, behavior, and dependencies highly variable, pushing many projects towards standardized but remote web interfaces, or resulting in projects that lack a graphical interface and run only from the command line.

- Prevalence of uncompiled tools : scripts, applications distributed in source code form, or those dependent on a package ecosystem (pip, npm, gems) require rebuilding or installing toolchains on the fly.

- Web/cloud development trend : the focus on web applications and browser-accessible frontends reduces the effort invested in optimizing compiled local executables, which are often more energy-efficient. JavaScript and Google APIs are also increasingly prominent in open-source applications.

- Dependence on volunteer maintainers : many critical packages are maintained by one or two people, creating a single point of failure if they abandon the project.

Together, these characteristics make development and operation under Linux more demanding in terms of dependency management, packaging, and governance.

Sources :

- Valve Employee: glibc not prioritizing compatibility damages Linux Desktop : r/linux_gaming

- Win32 Is The Only Stable ABI on Linux

- Not breaking userspace: the evolving Linux ABI | 19x

- glibc breaks ABI quite often. Linus has roasted about it openly in the past http… | Hacker News

- Linus Torvalds on why desktop Linux sucks (Youtube video)

3. Consequences for security and digital sovereignty

- Loss of legal and operational control : relying on foreign storage facilities means being subject to local laws, export policies, and access disruptions beyond the control of the sovereign entity (state, company).

- Supply chain attack risk : the compromise of a maintainer’s account, the injection of malicious code into a critical dependency, or the deletion of a package can trigger cascading effects that impact the availability and security of systems.

- Dependence on the network and energy waste : the model of frequent updates and builds increases exchanges with remote data centers, increasing traffic and energy consumption compared to stable, locally compiled binaries.

- Risk of erosion of autonomy during migrations : due to a lack of complete native applications, there is often a shift towards cloud (remote) services, which reduce sovereignty instead of increasing it.
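One widely used mitigation for the supply chain risk described above is to record a cryptographic digest of each dependency at review time and to refuse any artifact that no longer matches it. The sketch below illustrates the idea with Python's standard `hashlib`; the artifact contents and the `PINNED_DIGEST` name are assumptions made for the example, not part of any particular package manager.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, pinned_digest: str) -> bool:
    """Check an artifact against the digest recorded when it was reviewed."""
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(sha256_hex(data), pinned_digest.lower())


# Hypothetical artifact contents, standing in for a downloaded package file.
reviewed_artifact = b"print('hello from a reviewed release')\n"
PINNED_DIGEST = sha256_hex(reviewed_artifact)  # recorded at review time

# The same package after a hypothetical malicious modification upstream.
tampered_artifact = reviewed_artifact + b"import os  # injected line\n"

print(verify_artifact(reviewed_artifact, PINNED_DIGEST))
print(verify_artifact(tampered_artifact, PINNED_DIGEST))
```

A digest check of this kind detects that a package changed after it was reviewed; it says nothing about whether the reviewed version was trustworthy in the first place.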

4. Practical comparison: on-premises Windows vs. free Linux ecosystem

- On-premise control : Microsoft Windows Server environments (AD, DHCP, DNS, Exchange, IIS) allow for the complete management of a corporate network and data locally, without necessarily relying on the cloud, offering clear control over the location of services and backups. Windows provides consolidated graphical interfaces and centralized administration tools that are highly valued in enterprise environments. The lack of native solutions under Linux can push organizations towards cloud solutions, which is contrary to the objective of digital sovereignty.

- Centralized administration : The native integration of group management, the ACL model, and nested groups simplifies large-scale administration compared to Linux solutions (SSSD, winbind), which are often more fragmented and limited. Native POSIX groups, for example, cannot contain other groups, so any nesting has to be resolved by an additional directory layer, making centralized management complex and cumbersome.

- Industry standards : Contrary to the common misconception of a “black box,” Windows adheres to most industry standards (LDAP, Kerberos, SMB, TLS, etc.) and offers broad hardware and software compatibility, facilitating heterogeneous integration in mixed environments. This fosters a rich and diverse software/hardware ecosystem for all digital uses, both for individuals and businesses.

- Powerful, stable, and open development environment : The Visual Studio suite allows for the development of stable, native graphical applications, offers a choice of numerous languages, and also enables the compilation of applications for Android (with .NET MAUI). There is no mandatory app store or commission to pay on Windows, unlike the Apple and Android environments, which do not offer the same level of freedom in development and distribution of applications. The ability to freely and easily develop and distribute software is a cornerstone of digital sovereignty.

- Package longevity : On Windows, runtime libraries (CRT, UCRT, Visual C++ libraries) are designed to be redistributed with the application or installed as side-by-side components, reducing dependence on a single “system” libc and unforeseen updates. Linux solutions, on the other hand, may require recompilation or adjustments with each major change in dependencies. Compiled and packaged Windows applications often have a long operational lifespan and remain functional years after compilation, without relying on volatile repositories and maintainers. This is an asset for security and digital sovereignty, as it simplifies software inventories and audits.

- User experience : On Windows, the desktop experience, file managers, and graphical interfaces (easy file management, software installation) make it easy for the average user to control their data without resorting to remote services. Average users often prefer the simplicity of a file explorer and straightforward installers over command-line interfaces that require searching the internet even for basic tasks. The Linux file system and its directory structure are difficult for the average user to understand, diminishing their digital autonomy.
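To illustrate what nested groups mean for centralized administration, the sketch below resolves the effective members of a group whose members may themselves be groups. The directory contents are hypothetical, and the function is only an illustration of transitive membership; it is not the algorithm used by Active Directory, SSSD, or winbind.

```python
# Hypothetical directory: group name -> direct members (users or groups).
GROUPS = {
    "all-staff": ["engineering", "finance"],
    "engineering": ["backend", "alice"],
    "backend": ["bob", "carol"],
    "finance": ["dave"],
}


def effective_users(groups, group, _visiting=None):
    """Return every user that belongs to group, directly or through nesting."""
    visiting = set() if _visiting is None else _visiting
    if group in visiting:
        return set()  # already expanded, or a membership cycle
    visiting.add(group)
    users = set()
    for member in groups.get(group, []):
        if member in groups:
            # The member is itself a group: expand it recursively.
            users |= effective_users(groups, member, visiting)
        else:
            users.add(member)
    return users


print(sorted(effective_users(GROUPS, "all-staff")))
```

With nesting, granting a right to `all-staff` once covers every user in the sub-groups; without it, each user or each leaf group has to be listed explicitly wherever the right is granted.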

5. Hardware innovation and the role of Windows in the ecosystem

- Contrary to some misconceptions, digital innovation is not solely about software and open source code. It depends just as much on hardware as it does on software. The evolution of components (CPU, GPU, RAM, storage, cooling) has allowed software to handle increasing volumes of data and parameters.

- Windows is the dominant platform (95.4% market share on Steam in September 2025, compared to 2.68% for Linux and 1.92% for macOS), uniting the industry and facilitating economies of scale and rapid adoption. Video games have been a driving force, and the competition for gaming performance has led to massive gains in CPU/GPU capabilities, which are then leveraged for scientific computing and AI. Virtual reality (VR), which is demanding on GPUs (stereoscopic 3D rendering), is used in engineering and gaming, with a rich PC ecosystem (Windows-compatible headsets, Steam game catalog).

- The Windows ecosystem, through its balance between proprietary technology and compatibility, has united manufacturers, developers, and users, stimulating hardware R&D and widespread adoption. The consumer video game market has pushed the limits of hardware performance, and these advancements have subsequently benefited servers, supercomputers, simulations, virtual reality, and AI. Hardware progress makes increasingly complex software processing possible, used in fields such as weather forecasting and engineering, for example.

- The established players, Intel, AMD, and Nvidia, have evolved thanks to the Windows market to address HPC and AI applications. Indirect funding from the consumer market has generated positive externalities across the entire hardware industry.

- The combined importance of hardware and software means that innovation strategies must simultaneously support hardware and software R&D, leverage broad ecosystems to reduce unit costs, and recognize the role of consumer markets (gaming, VR) as a catalyst for technical advancements useful for professional applications.

- Digital progress depends on both hardware and software, and the Windows ecosystem, together with the gaming market, has accelerated hardware improvements to the benefit of computing as a whole.

Sources :

- https://store.steampowered.com/hwsurvey/Steam-Hardware-Software-Survey-Welcome-to-Steam

- https://www.renaultgroup.com/en/magazine/our-group-news/3d-sketching-a-digital-touch-to-every-drawing/

- https://www.jonpeddie.com/news/super-duper-computer-amd-does-it-again/

- https://www.energy.gov/articles/energy-department-announces-new-partnership-nvidia-and-oracle-build-largest-doe-ai

6. Cloud, Linux and false promises of sustainability

- The distinction between local and remote : confusing Windows (operating system) and Microsoft Cloud (remote service) distorts the debate — one can maintain sovereignty with Windows on-premise or lose sovereignty with cloud services, regardless of the operating system.

- The cloud does not guarantee continuity : loss of access, major incidents, and data center fires do occur; massive cloud infrastructure outages have already happened and demonstrate a centralization of risk that can affect thousands of organizations simultaneously. The massive AWS outage of October 20, 2025, and the incredible loss of 858 TB of public data by the South Korean government due to a fire at the G-Drive government cloud data center, are striking examples.

- Attack surface and exposure surface : Cloud services increase the number of external interfaces and complexity, often offering a larger attack surface than well-hardened on-premise services, including Microsoft 365 cloud services, which are ultimately more exposed than their on-premise counterparts. Phishing attacks can even compromise multi-factor authentication (MFA).

- The cloud is antithetical to digital sovereignty : Although useful, even indispensable for certain exchanges and configurations, the cloud itself represents, to varying degrees, a loss of sovereignty compared to on-premise solutions. It necessarily involves delegating control of a portion of the digital infrastructure to a distant third party and becoming dependent on them to a certain extent. The provider of a cloud solution can unilaterally terminate your access to the service and your data, or impose significant changes to the terms of service (vendor lock-in).

- Long-term sustainability under Linux is not guaranteed : In addition to packages and dependencies that may change or disappear, sometimes entire Linux distributions vanish, leaving users to face complex migrations on their own. The abrupt discontinuation of CentOS by Red Hat is a striking example.

- Linux is not synonymous with absolute security : enterprise distributions and software can be vulnerable to flaws or hacking (e.g., incidents affecting critical distributions or packages), which serves as a reminder that no system is inherently invulnerable.

- Recent figures on Linux infections:

- The Ebury botnet has infected approximately 400,000 Linux servers since 2009, averaging 26,000 infections per year, with 100,000 machines still compromised at the end of 2023.

- Another piece of malware called perfctl has quietly infiltrated thousands of Linux systems for several years, exploiting common configuration errors.

- Overall statistics on malware show a constant increase in infections in businesses, including on Linux systems, with propagation rates reaching 75% in 2022 in some environments.

Only a comprehensive approach to digital sovereignty, combining on-premise and cloud components, can make a real difference. Don’t assume that the cloud and Linux alone will solve all the challenges of configuring, securing, backing up, scaling, and ensuring the redundancy of IT and digital infrastructures. Carefully evaluate the possibilities for recovering and migrating data and tools from one cloud provider to another. SaaS tools can lead to vendor lock-in that is more difficult to overcome than with on-premise solutions.

Sources :

- https://www.wired.com/story/amazon-explains-how-its-aws-outage-took-down-the-web/

- https://www.chosun.com/english/national-en/2025/10/02/FPWGFSXMLNCFPIEGWKZF3BOQ3M/

- https://www.proofpoint.com/us/blog/threat-insight/microsoft-oauth-app-impersonation-campaign-leads-mfa-phishing

- https://www.cloudflare.com/learning/cloud/what-is-vendor-lock-in/

- https://www.bleepingcomputer.com/news/security/ebury-botnet-malware-infected-400-000-linux-servers-since-2009/

- https://socradar.io/perfctl-campaign-exploits-millions-of-linux-servers-for-crypto-mining-and-proxyjacking/

7. Open source code : less privacy for more security ?

- Code sharing is not a panacea for sovereignty : some sensitive sectors require proprietary components or components audited by qualified third parties; making the code open to everyone is not always desirable or relevant. Data and network traffic are often the main issue: controlling where the data resides, who accesses it, and how it is transmitted is more critical than simply opening up the code; code visibility does not necessarily eliminate operational or legal risks.

- The belief that open-source code allows the community to detect vulnerabilities in a timely manner is not borne out by the facts. Numerous security vulnerabilities in open-source code persist for years before being detected and corrected. Some threats, such as Perfctl (2021) or Ebury (2009!), have been active for years without any reaction from the FOSS community, which remains silent, or even conceals these serious incidents affecting tens of thousands of Linux systems every year.

- Many critical vulnerabilities in widely used FOSS projects are detected by institutional actors or companies rather than by the volunteer community. The Log4j vulnerability was present in the open-source code for all to see since 2013 before being detected only in 2021 by researchers at the e-commerce giant Alibaba. The XZ Utils vulnerability was discovered by a Microsoft engineer.

“Log4j maintainers have been working sleeplessly on mitigation measures; fixes, docs, CVE, replies to inquiries, etc. Yet nothing is stopping people to bash us, for work we aren’t paid for, for a feature we all dislike yet needed to keep due to backward compatibility concerns”

Volkan Yazıcı, Apache Software Foundation – December 10, 2021

Sources :

- Focus on Perfctl: The malware targeting Linux systems

- https://www.bleepingcomputer.com/news/security/ebury-botnet-malware-infected-400-000-linux-servers-since-2009/

- Log4Shell: behind the major vulnerability, the eternal question of support for free software

- XZ Utils: How a backdoor in a Linux component caused fears of the worst

- https://x.com/yazicivo/status/1469349956880408583

8. Dominant FOSS culture : inconsistencies, biases and dogmatism

The risks and difficulties encountered with FOSS development are not solely due to the technical challenges of Linux and its dependencies; they stem primarily from a biased and misguided technical culture. A dominant culture, based on simplistic beliefs and excessive abstraction, has disconnected developers from the realities of hardware, networking, and systems.

There is a dogmatic fringe that believes in the “absolute” security of Linux or open-source software, often without understanding modern attack vectors (supply chain attacks, cloud misconfigurations, kernel exploits). Forums are full of simplistic rhetoric like “Windows = spyware, Linux = freedom,” which obscures operational realities. A segment of the web development community (particularly full-stack JavaScript developers) ignores or underestimates disciplines such as network security, system architecture, or permission management. This leads to naive configurations, unencrypted storage, poorly managed CORS, and so on.

Disconnection from IT disciplines :

- Lack of understanding of the network : Few developers understand ACLs, VLANs, firewalls, or segmentation models.

- Ignorance of hardware : CPU, RAM, I/O, microcode, firmware… are gray areas for many. The result: over-provisioning, poor optimization, and physical vulnerabilities.

- Cloud-first, black box : The abstraction of the cloud masks the underlying hardware, network, and system realities. Developers often don’t know where their code is running, on what hardware, or with what security protections.

- The DevOps without DevSec effect : Automation takes precedence over security. CI/CD pipelines deploy code without rigorous control of dependencies or configurations.

The myth of open source superiority :

- Transparency ≠ security : Open source code guarantees nothing without auditing, maintenance, and governance. In practice, many critical projects are under-maintained, with little code review or security testing. The Log4Shell vulnerability remained undetected for years despite the code being open source.

- Dogmatism and Linux myths : Many believe that Linux is “inherently safe,” ignoring kernel vulnerabilities, configuration errors, and physical attacks. Some claims that have been proven false (“There is no malware on Linux,” for instance) still circulate on forums without being corrected by the community.

- Software-only dogma : The belief that everything is software and that hardware is secondary. The software and its source code alone handle everything (performance, security, functionality, connectivity, etc.) independently of the hardware or network infrastructure.

- The myth of the ethical superiority of free software : The universal sharing of knowledge is a morally noble goal, but the systematic avoidance of professional, paid proprietary solutions in favor of open-source freeware, often originating from personal hobbyist projects, for professional and commercial reuse raises serious ethical and fair competition concerns, as seen in the examples of Red Hat or WordPress.

“One thing you do is prevent good software from being written. Who can afford to do professional work for nothing? What hobbyist can put 3-man years into programming, finding all bugs, documenting his product and distribute for free?”

Bill Gates – Open Letter to Hobbyists – February 1976

Red Hat vs CentOS :

The open-source approach often presents serious inconsistencies, as illustrated by the abrupt discontinuation of CentOS, a major Linux distribution, by Red Hat, due to competition with its own “open-source” but partially paid products. Red Hat acknowledged that it was impossible for them to maintain CentOS completely free of charge, and that it was overshadowing RHEL, for which paid subscriptions are available.

“CentOS necessarily hampered RHEL sales by being a free, but also functional, alternative.”

Le MagIT – End of life for CentOS 7: what now? – October 11, 2024

“The very existence of a free version of a paid system, when their functions were 100% identical, sowed more confusion than anything else, both for Red Hat’s sales teams and for its customers”

Red Hat, a major player in Linux and the FOSS world, showed no remorse towards the millions of individual and business users of CentOS when it decided to protect its own business model. It left them with no choice but to migrate to a different operating system, a process that can be complicated and which Red Hat offers as a paid service to the CentOS 7 users it has abandoned : “Red Hat Enterprise Linux for Third Party Linux Migration is a new offering designed to make Red Hat Enterprise Linux more accessible to CentOS Linux users with a competitively priced subscription and a simplified conversion process.”

WordPress vs WP Engine :

Another example is the dispute between WordPress and WP Engine. WordPress, unhappy with WP Engine’s modifications to the source code for its clients, and with the fact that despite significant revenue, WP Engine doesn’t contribute more to the funding and development of WordPress, simply decided to cut off their access to WordPress modules, leaving WP Engine’s customers without security updates for their installed plugins and themes. In addition to this, WordPress went so far as to send messages directly to WP Engine’s customers and even appropriated and “forked” a WP Engine plugin!

This once again highlights the legal ambiguity surrounding the use of open-source code and its ownership, as well as the limits of sovereignty in an open-source ecosystem that relies heavily on online repositories controlled by third parties. There are no enforceable rights, in either direction. This demonstrates the limitations of promoting open source as a lever for growth and sovereignty for digital companies and organizations.

These emblematic cases are the logical consequence of the fundamental problem of remuneration and the sustainability of the free software model. The hypothesis of a world where everything is produced for free ignores the economic reality of maintenance, bug fixing, and security requirements; hybrid models (commercial + open core, OSPOs, public funding) are often more sustainable.

Sources :

- https://en.wikipedia.org/wiki/File:Bill_Gates_Letter_to_Hobbyists_ocr.pdf

- End of life for CentOS 7: what now?

- https://www.redhat.com/en/technologies/linux-platforms/red-hat-enterprise-linux-for-third-party-linux-migration

- WordPress vs WP Engine: a conflict with serious consequences for the CMS ecosystem

- https://wptavern.com/acf-plugin-forked-to-secure-custom-fields-plugin

- https://world.hey.com/dhh/automattic-is-doing-open-source-dirty-b95cf128

9. Digital sovereignty : beware of false friends

- macOS is proprietary like Windows, but it is closed in terms of both hardware and software, which limits autonomy.

- Android and iOS collect massive amounts of personal data: photos, contacts, location, browsing history, and much more – far more than Windows 11 (do not confuse personal data with telemetry).

- Google dominates access to information through Search and News, influencing the press and open-source developers.

10. The true criteria of digital sovereignty

- Software openness : Does the digital solution allow for easy interaction with other third-party solutions? Does it allow me to easily create data, content, and applications that I can freely distribute?

- Hardware openness : The foundation of a sovereign digital solution is physical control of the hardware. Computers, servers, telephones, network equipment. Does the implemented solution allow me to freely choose the hardware infrastructure on which I want to install, run, and store the data (computers, servers, smartphones)?

- Privacy : Does the solution respect my privacy or the confidentiality of my company’s data? What is the nature and volume of the data collected and transmitted to digital solution providers?

- Local autonomy : Does the digital solution allow for completely offline deployment from installation sources? Does it depend on online accounts and services for its installation or use? Some solutions, even those that are on-premise, still require an online account for administration.

- Data extractability : To what extent can I retrieve the data stored on a digital device? (e.g., removing the hard drive from a computer and recovering the data on another device, or accessing a Micro SD card on a smartphone). Some solutions do not allow physical access to the data.

- Data exportability : Does the solution allow me to export the data in a standard format compatible with other competing third-party solutions if I wanted to switch to a different one?
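The six criteria above can be applied as a simple checklist when comparing candidate solutions. The sketch below is one hypothetical way to record such an assessment; the class name, the yes/no simplification, and the example values are assumptions made for illustration, since each criterion is in practice a matter of degree.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SovereigntyAssessment:
    """One yes/no answer per criterion listed above (a simplification)."""
    software_openness: bool
    hardware_openness: bool
    privacy: bool
    local_autonomy: bool
    data_extractability: bool
    data_exportability: bool

    def unmet(self):
        """Return the names of the criteria the solution does not satisfy."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical assessment of an on-premise product that still requires
# an online account and only exports data in a vendor-specific format.
example = SovereigntyAssessment(
    software_openness=True,
    hardware_openness=True,
    privacy=True,
    local_autonomy=False,
    data_extractability=True,
    data_exportability=False,
)

print(example.unmet())
```

Listing the unmet criteria, rather than computing a single score, keeps the trade-offs visible and avoids implying that the criteria are interchangeable.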

11. Practical recommendations for a pragmatic digital sovereignty

- Choose the tool best suited to the need : Linux for specialized servers and technical flexibility; Windows on-premise for centralized management, interoperability, and operational simplicity; avoid decisions dictated by dogma.

- Prioritize on-premise solutions for strategic functions (directory services, email, critical storage) or choose a sovereign bare-metal hosting solution located within the relevant legal jurisdiction.

- Re-evaluate the true costs (human and financial) of securing Linux and open-source environments in light of security and sovereignty requirements (package maintenance, dependency auditing, advanced configuration, EDR/XDR security solutions).

- Evaluate energy costs and performance of architectures: prioritize locally compiled binaries for intensive use and limit energy-intensive, unoptimized web applications.

- Promote reproducible builds and on-premise mirrors of critical repositories to limit direct dependencies on third-party servers.

- Strengthen open source governance: sustainable funding for maintainers, security audits, policies for managing maintainer accounts, and review of supply chains.

- Map dependencies and maintain a software inventory: list repositories, critical packages and maintainers, and define recovery plans (forks, internal mirrors).

- For individuals concerned about their digital sovereignty and privacy, it’s preferable to use a personal computer to manage software, photos, and personal documents locally (without forgetting to back up important data on other physical media or online), rather than smartphones, which are inherently designed for the collection of personal data and its exploitation in the cloud.
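The dependency mapping and inventory recommended above can start as a very small data structure. The sketch below flags packages that have few maintainers or no internal mirror; the package names, the registry host, the field names, and the threshold of two maintainers are all hypothetical and would need to be adapted to the organization's own inventory source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    name: str
    registry: str     # host the package is fetched from
    maintainers: int  # number of accounts with publish rights
    mirrored: bool    # True if an internal mirror or fork exists


def risk_flags(dep, max_fragile_maintainers=2):
    """Return the sovereignty-related risks identified for one dependency."""
    flags = []
    if dep.maintainers <= max_fragile_maintainers:
        flags.append("few maintainers")
    if not dep.mirrored:
        flags.append("no internal mirror")
    return flags


# Hypothetical inventory entries, for illustration only.
INVENTORY = [
    Dependency("compress-lib", "registry.example.org", 1, False),
    Dependency("web-framework", "registry.example.org", 12, True),
    Dependency("date-utils", "registry.example.org", 2, True),
]

report = {dep.name: risk_flags(dep) for dep in INVENTORY}
for name, flags in report.items():
    if flags:
        print(f"{name}: {', '.join(flags)}")
```

Packages that accumulate several flags are natural candidates for the recovery plans mentioned above, such as an internal mirror or a maintained fork.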

Conclusion

Digital sovereignty is not a binary issue of “open source = sovereign” versus “proprietary = servitude.” It is measured by the control of data, software supply chains, and hardware infrastructure, as well as the ability to govern and finance maintenance. Open-source software under Linux offers significant benefits, but its distributed dependency model and operational fragmentation create real risks to sovereignty if these risks are not actively managed. For many organizations and users, on-premise Microsoft Windows solutions currently offer a pragmatic compromise: interoperability based on standards, unified administration, stable binaries, and legal and physical control of data. Useful innovation does not depend on an “open source” label; it depends on an ecosystem that funds R&D, supports the hardware, and allows for control over the entire digital chain.